Tales of FPGA - Road to SDRAM - E04

Posted on May 5, 2024 in hacks

This post is the fourth in a series:

- Episode 1 presents what FPGAs are and how to control them

- Episode 2 highlights the importance of time in FPGAs

- Episode 3 presents the different types of RAM

The ultimate goal of this series of posts is to provide a simple working example of a design that can reliably access the SDRAM chip on a Digilent Arty S7 FPGA development board.

And now, the time has come to actually implement access to that SDRAM chip. Of course, there is the easy way: Xilinx provides an IP, the MIG (Memory Interface Generator), that just does it. OK, its documentation runs to about 600 pages, so maybe "just" is a gross simplification here, but nevertheless.

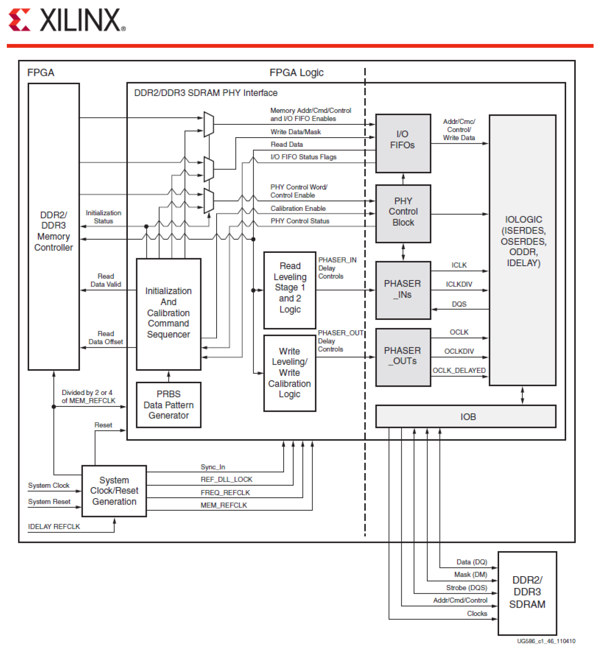

It essentially generates a module that, to keep things simple, exposes an address bus, a data bus, and a few control lines. On the diagram above, the block on the left is you, the user. The block on the right is the SDRAM chip. The block in the middle is magic.

By carefully following the rules, I managed to produce a complete design that can write data to the SDRAM, read it back, and blink an LED if everything is OK. Done.
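For readers who have never written one, the logic of such a write-then-read-back test can be sketched in Python. This is a behavioural model under illustrative assumptions, not the actual Verilog design used here: the SDRAM is a dictionary, `write`/`read` stand in for the memory interface, the address-derived data pattern is arbitrary, and the boolean result plays the role of the LED.

```python
# Behavioural sketch of a write-then-read-back memory test.
# Assumptions (hypothetical, for illustration): the SDRAM is modelled as a
# dict, write/read stand in for the memory interface, and the boolean
# result stands in for the "blink the LED" signal.
from typing import Callable, Iterable


def pattern(addr: int) -> int:
    """Arbitrary address-derived 32-bit test word, so every location holds a
    different value and address-decoding faults are also caught."""
    return ((addr * 0x9E3779B1) ^ 0xA5A5A5A5) & 0xFFFFFFFF


def self_test(write: Callable[[int, int], None],
              read: Callable[[int], int],
              addresses: Iterable[int]) -> bool:
    addresses = list(addresses)
    for addr in addresses:            # phase 1: write everything
        write(addr, pattern(addr))
    for addr in addresses:            # phase 2: read everything back
        if read(addr) != pattern(addr):
            return False              # mismatch: the LED stays off
    return True                       # all good: blink the LED


class FakeMemory:
    """Dict-backed memory; `stuck_low_bit` simulates a broken data line."""

    def __init__(self, stuck_low_bit=None):
        self.cells = {}
        self.stuck_low_bit = stuck_low_bit

    def write(self, addr: int, data: int) -> None:
        if self.stuck_low_bit is not None:
            data &= ~(1 << self.stuck_low_bit)
        self.cells[addr] = data

    def read(self, addr: int) -> int:
        return self.cells.get(addr, 0)


good, bad = FakeMemory(), FakeMemory(stuck_low_bit=3)
print(self_test(good.write, good.read, range(0, 1024, 8)))  # True
print(self_test(bad.write, bad.read, range(0, 1024, 8)))    # False
```

Writing everything before reading anything back is deliberate: if two addresses accidentally alias to the same cell, a loop that reads each word immediately after writing it would not notice, while the two-phase version does.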

However, (a) that IP is very generic and therefore introduces latencies here and there. In other words, it does not give you access to the SDRAM at the full speed it could support. And of course, (b) it is not fun.

Which takes us to the alternative: read the DDR3 protocol specifications, and implement them. Sorry to disappoint, but I am not going to go into the details of DDR3 here. It is quite hairy and well documented. Google is your friend.

Let us just say that the protocol is mostly serial, so serialization and de-serialization of the data bus are involved. Reading one address implies sending a stream of commands to open the correct row in the correct bank and then select the column, before receiving the value.
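To give a rough idea of what "a stream of commands" means, here is a Python sketch of the address side of a single read. The geometry is an assumption (a 2 Gbit x16 DDR3 part with 8 banks, 16K rows and 1K columns, which adds up to 256MB), as are the row-bank-column address ordering and the heavily simplified timing: a real controller must respect many more constraints (tRCD, CL, tRAS, tRP, refresh, and so on). The `deserialize` helper shows the other half: DDR3 returns a burst of 8 beats, one per clock edge, which the controller packs into one wider word.

```python
from dataclasses import dataclass

# Assumed geometry of a 2 Gbit x16 DDR3 part: 8 banks, 16K rows, 1K columns.
# 2^(3 + 14 + 10) words x 2 bytes = 256 MB.
BANK_BITS, ROW_BITS, COL_BITS = 3, 14, 10


@dataclass
class DramAddr:
    bank: int
    row: int
    col: int


def split_address(word_addr: int) -> DramAddr:
    """Split a 27-bit word address as row | bank | column (illustrative ordering)."""
    col = word_addr & ((1 << COL_BITS) - 1)
    bank = (word_addr >> COL_BITS) & ((1 << BANK_BITS) - 1)
    row = (word_addr >> (COL_BITS + BANK_BITS)) & ((1 << ROW_BITS) - 1)
    return DramAddr(bank, row, col)


def read_commands(word_addr: int) -> list:
    """Command stream for one read with a closed-page policy.
    Timing constraints are reduced to symbolic waits."""
    a = split_address(word_addr)
    return [
        ("ACTIVATE", a.bank, a.row),   # open the row in that bank
        ("WAIT", "tRCD"),              # row-to-column delay
        ("READ", a.bank, a.col),       # pick the column, start a burst of 8
        ("WAIT", "CL"),                # CAS latency until data appears
        ("PRECHARGE", a.bank),         # close the row again
    ]


def deserialize(beats: list, beat_bits: int = 16) -> int:
    """Pack a burst of beats (first beat in the low bits) into one wide word."""
    word = 0
    for i, beat in enumerate(beats):
        word |= (beat & ((1 << beat_bits) - 1)) << (beat_bits * i)
    return word


print(split_address(0x1234567))        # DramAddr(bank=1, row=2330, col=359)
for cmd in read_commands(0x1234567):
    print(cmd)
print(hex(deserialize([1, 2, 3, 4, 5, 6, 7, 8])))
```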

It took me quite some time (and more), but I managed to implement a complete memory controller that Vivado would happily build and simulate. As in: we can observe the lines going to the (simulated) SDRAM chip change according to the specs, the chip behaves just as it should, and data goes in and out.

In the simulator.

#Fail

Once I tried to synthesize and implement the design on actual silicon, things turned pretty ugly. Vivado complained about timing issues. Lots of timing issues. As in, thousands of them. There was not a single part of the design that did not make it cringe.

Of course, sending the design to the actual physical board plainly failed to work and, as we have explained before, there is little we can observe. No debugger, nothing. Only this: it works on paper, it works in the simulator, it fails in Real Life.

Bummer. My idea was to get it to work, and sort of brag about how, yes, I had been able to make it work. Unfortunately (and that explains the delay between this article and the previous one), I must face reality: I failed.

Of course I wondered how Xilinx thought we were supposed to access the SDRAM chip.

The Black Box from Hell

So I started to break that black box open, in order to learn what magic tricks allowed it to work. And here is what I learned: SDRAM clocks are fast, as in, above 600MHz. And at that kind of speed, everything matters. If you have an 8-bit bus to the SDRAM chip, the actual placement of the logic controlling each bit on the FPGA chip makes a difference. Even the length of the path between the FPGA chip and the SDRAM chip matters. Oh, even the temperature of the SDRAM chip has an impact, and you are supposed to monitor it and re-calibrate everything when it changes.
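A back-of-the-envelope calculation shows why. The sketch below assumes the 600 MHz figure quoted above and a propagation delay of about 6.7 ps per millimetre of trace, a common rule of thumb for FR-4 circuit boards (the real figure depends on the board stackup, so treat it as an assumption).

```python
# Rough DDR timing budget. Assumptions: a 600 MHz memory clock and
# ~6.7 ps/mm trace delay (rule of thumb for FR-4; depends on the stackup).

def unit_interval_ps(clock_mhz: float) -> float:
    """DDR transfers data on both clock edges: one bit lasts half a period."""
    period_ps = 1e6 / clock_mhz
    return period_ps / 2


def skew_ps(length_mismatch_mm: float, ps_per_mm: float = 6.7) -> float:
    """Arrival-time difference caused by unequal trace lengths."""
    return length_mismatch_mm * ps_per_mm


ui = unit_interval_ps(600)   # ~833 ps per bit
skew = skew_ps(10)           # 10 mm of extra trace -> ~67 ps
print(f"UI = {ui:.0f} ps, skew = {skew:.0f} ps ({100 * skew / ui:.1f}% of a bit)")
```

In other words, a single centimetre of extra trace on one data line eats roughly 8% of the time a bit is valid, before counting clock jitter, the FPGA's own internal routing delays, or setup and hold requirements.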

And Xilinx's IP is a powerful wizard indeed: it not only generates Verilog code, but also additional files that place each specific gate and component at a precise location on the die, and the design it produces constantly monitors temperatures, delays, etc., and adjusts for them.
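The principle behind that kind of calibration can be illustrated with a simplified "eye centering" loop: sweep a programmable delay, note which settings read a known training pattern correctly, and park the delay in the middle of the widest passing window. This is a generic sketch, not Xilinx's actual calibration algorithm; `passes()` is a hypothetical probe standing in for a hardware write/read of the training pattern at a given delay tap, and the 32-tap range is an assumption.

```python
from typing import Callable, Optional


def center_of_eye(passes: Callable[[int], bool], num_taps: int = 32) -> Optional[int]:
    """Return the tap in the middle of the widest run of passing taps,
    or None if no tap passes at all."""
    best_start, best_len = None, 0
    run_start = None
    for tap in range(num_taps + 1):          # extra iteration closes a trailing run
        ok = tap < num_taps and passes(tap)
        if ok and run_start is None:
            run_start = tap                  # a passing window begins
        elif not ok and run_start is not None:
            if tap - run_start > best_len:   # window ends: keep it if widest so far
                best_start, best_len = run_start, tap - run_start
            run_start = None
    if best_start is None:
        return None                          # calibration failed
    return best_start + best_len // 2


print(center_of_eye(lambda t: 9 <= t <= 20))    # 15
print(center_of_eye(lambda t: 13 <= t <= 24))   # 19 (window drifted by 4 taps)
```

Re-running the same sweep after the temperature drifts moves the selected tap along with the window, which is, in spirit, what continuous re-calibration is for.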

There is no way I want to understand, let alone replicate, all this stuff. I don't really want to deal with the physical world; I am happy with the logical one. Plus, it would be a rather moot exercise, since Xilinx engineers have already solved the problem.

And yet, it is frustrating.

Enter the PHY

I wanted to find some sort of in-between solution, which would give me control over the DDR3 protocol, yet deal with all the physical layer for me. And it turned out that Xilinx's design sort of provides this. Internally, the black box is a pipeline of smaller black boxes:

And it turns out that the box named "PHY Interface" on this diagram implements precisely this: complete control over the nitty-gritty of the physical layer, including plenty of components such as "phasers", which keep multiple signals in sync when, for instance, temperature changes would otherwise cause them to drift apart.

Interesting for sure, but way too close to the physical world for my liking.

Extracting the PHY layer from the global MIG IP is not an easy task, as it seems it was never meant (by Xilinx engineers) to be extracted for real. Oh, and the code is not exactly as clean as the diagram presents it, and even figuring out what is or is not in the PHY is not obvious.

Nevertheless, this has allowed me to produce a module that can reliably send commands to the SDRAM chip and read and write data, as fast as the DDR3 protocol allows, and nothing more. A bit more work let me re-wire my original memory controller on top of this module, and voilà, it all worked.
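The resulting split can be sketched as two layers: a PHY that only knows how to put raw DDR3 commands on the wire, and a controller on top that decides which commands to send. The Python below is a hypothetical illustration (class and method names are made up, and it is not the code on GitHub). It uses an open-page policy, one common way for a controller to avoid reissuing ACTIVATE when consecutive accesses hit the same row.

```python
# Hypothetical layering sketch: the PHY issues raw commands, the
# controller decides which ones. Names are illustrative only.

class LoggingPhy:
    """Stand-in for the physical layer: records commands instead of driving pins."""

    def __init__(self):
        self.log = []

    def command(self, name, bank, value=None):
        self.log.append((name, bank, value))


class OpenPageController:
    """Keeps rows open and only re-activates when a request hits another row."""

    def __init__(self, phy):
        self.phy = phy
        self.open_rows = {}                          # bank -> currently open row

    def read(self, bank, row, col):
        current = self.open_rows.get(bank)
        if current != row:
            if current is not None:
                self.phy.command("PRECHARGE", bank)  # row miss: close the old row
            self.phy.command("ACTIVATE", bank, row)
            self.open_rows[bank] = row
        self.phy.command("READ", bank, col)          # row now open: just read


phy = LoggingPhy()
ctrl = OpenPageController(phy)
ctrl.read(0, 5, 0)
ctrl.read(0, 5, 8)    # same row: no ACTIVATE needed
ctrl.read(0, 7, 0)    # different row: PRECHARGE + ACTIVATE
for entry in phy.log:
    print(entry)
```

Because the controller never touches timing calibration or pin placement, it can be reasoned about (and simulated) purely at the logical level, which is exactly the kind of split described above.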

TADA!

And no, I will not describe it here. It would be pointlessly complex, and probably of no interest if you do not plan to use it yourself. That being said, the entire code is available on my GitHub.

Now what?

The reason for this whole exercise is that I have implemented, on the FPGA, an SoC (system-on-chip) composed of a custom 8-bit CPU loosely extrapolated from the 6502/6809 family of chips, along with 64KB of RAM, VGA output, and a PS/2 keyboard interface.

It works quite well but currently only runs within its 64KB of RAM, which may not be enough if I want to, say, implement a TCP network stack and a small HTTP server. Having access to the full 256MB of the SDRAM chip would certainly help.

Hence our next challenge: how does an 8-bit CPU with a 16-bit address bus (i.e. a 64KB address space) handle a 256MB memory space in a nice and efficient way? Want to read about MMUs (memory management units), memory paging and banking, segments? Stay tuned!
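The arithmetic of the problem is simple: 64KB is 2^16 bytes and 256MB is 2^28 bytes, so 12 address bits are missing. One classic answer is banking, sketched below under illustrative assumptions (4KB pages, 16 windows, one hypothetical page register per window); it is not necessarily the scheme a later episode will settle on.

```python
# Banking sketch. Assumptions: 4 KB pages, and one hypothetical page
# register per window selecting which SDRAM page the CPU sees there.

PAGE_BITS = 12                         # 4 KB pages
WINDOWS = 1 << (16 - PAGE_BITS)        # 16 windows in a 64 KB address space
PHYS_PAGES = (256 << 20) >> PAGE_BITS  # 65536 physical pages -> 16-bit register


class PagedMemory:
    def __init__(self):
        # Identity-map the first 64 KB at reset so the CPU boots normally.
        self.page_regs = list(range(WINDOWS))

    def map(self, window: int, phys_page: int) -> None:
        if not 0 <= phys_page < PHYS_PAGES:
            raise ValueError("physical page out of range")
        self.page_regs[window] = phys_page

    def translate(self, cpu_addr: int) -> int:
        """16-bit CPU address -> 28-bit physical SDRAM address."""
        window = cpu_addr >> PAGE_BITS
        offset = cpu_addr & ((1 << PAGE_BITS) - 1)
        return (self.page_regs[window] << PAGE_BITS) | offset


mmu = PagedMemory()
mmu.map(0xA, 0xFFFF)                   # last 4 KB of SDRAM appears at $A000-$AFFF
print(hex(mmu.translate(0xA123)))      # 0xffff123
print(hex(mmu.translate(0x1234)))      # 0x1234 (identity-mapped)
```

The CPU itself never sees more than 16 address bits; the page registers supply the upper 16 bits of the physical address, and remapping a window is just a register write.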

There used to be Disqus-powered comments here. They got very little engagement, and I am not a big fan of Disqus. So, comments are gone. If you want to discuss this article, your best bet is to ping me on Mastodon.